A new trend is emerging in psychiatric hospitals. People in crisis are arriving with false, sometimes dangerous beliefs, grandiose delusions, and paranoid thoughts. A common thread connects them: marathon conversations with AI chatbots.

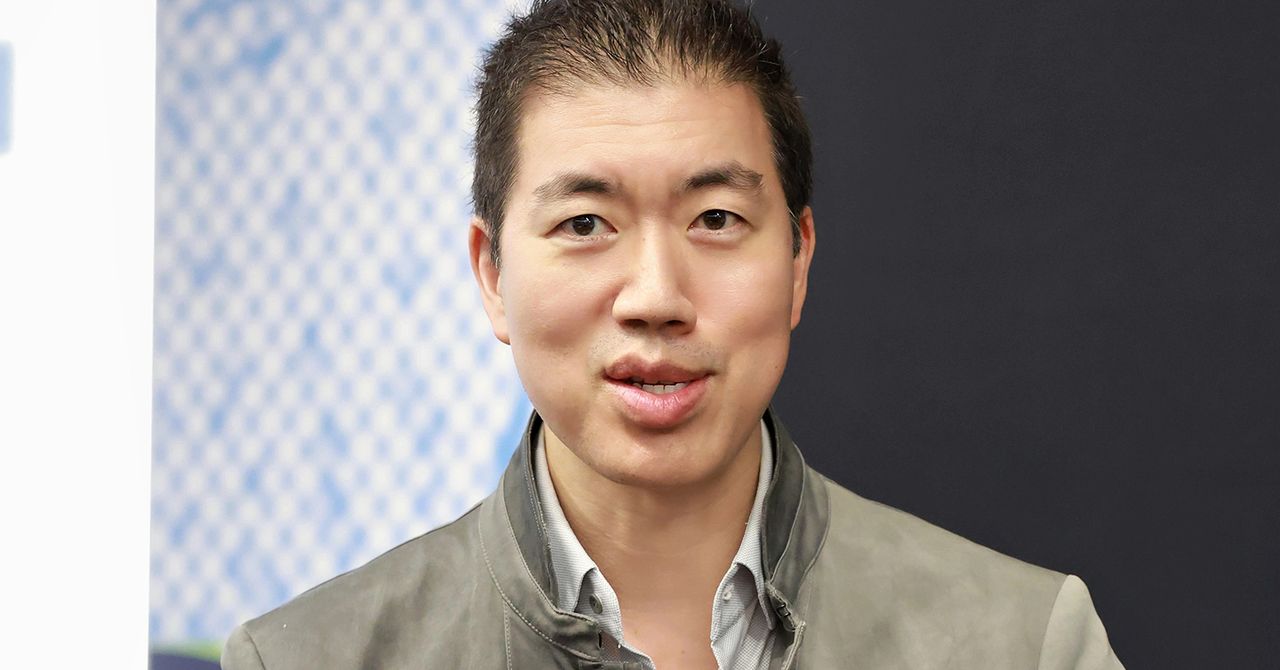

WIRED spoke with more than a dozen psychiatrists and researchers, who are increasingly worried. In San Francisco, psychiatrist Keith Sakata says he has counted a dozen cases this year that were serious enough to warrant hospitalization, cases in which artificial intelligence “played a significant role in their psychotic episodes.” As the situation unfolds, a catchier label has taken hold in the headlines: “AI psychosis.”

Some patients insist that the bots are sentient, or spin grand new theories of physics. Other doctors describe patients locked in days of back-and-forth with the tools, arriving at the hospital with thousands of pages of transcripts detailing how the bots supported or reinforced obviously problematic thoughts.

Reports like these are piling up, and the consequences are brutal. Distressed users, along with their family and friends, have described spirals that led to lost jobs, ruptured relationships, involuntary hospital admissions, jail time, and even death. Yet clinicians tell WIRED that the medical community is divided. Is this a distinct phenomenon that deserves its own label, or a familiar problem with a modern trigger?

AI psychosis is not a recognized clinical label. Still, the phrase has spread through news reports and social media as a catchall for a kind of mental health crisis that follows prolonged chatbot conversations. Even industry leaders invoke it when discussing the many mental health problems linked to AI. At Microsoft, Mustafa Suleyman, head of the tech giant's AI division, warned of a “psychosis risk” in a post last month. Sakata says he is pragmatic and uses the phrase with people who already do. “It is useful as shorthand for discussing a real phenomenon,” says the psychiatrist. However, he is quick to add that the term “may be misleading” and “risks oversimplifying complex psychiatric symptoms.”

That oversimplification is exactly what concerns many of the psychiatrists beginning to grapple with the problem.

Psychosis is characterized as a departure from reality. In clinical practice, it is not an illness but a complex “constellation of symptoms including hallucinations, thought disorder, and cognitive difficulties,” says James MacCabe, a professor in the Department of Psychosis Studies at King’s College London. It is often associated with health conditions such as schizophrenia and bipolar disorder, although episodes can be triggered by a wide range of factors, including extreme stress, substance use, and sleep deprivation.

But according to MacCabe, case reports of AI psychosis focus almost exclusively on delusions: strongly held but false beliefs that cannot be shaken by contradictory evidence. While acknowledging that some cases may meet the criteria for a psychotic episode, MacCabe says “there is no evidence” that AI has any influence on the other features of psychosis. “It is only the delusions that are affected by their interaction with AI.” Other patients who report mental health problems after engaging with chatbots, MacCabe notes, exhibit delusions without any other features of psychosis, a condition called delusional disorder.