The original version of this story appeared in Quanta Magazine.

The Chinese AI company DeepSeek released a chatbot earlier this year called R1, which drew a huge amount of attention. Most of it focused on the fact that a relatively small and unknown company said it had built a chatbot that rivaled the performance of those from the world’s most famous AI companies while using a fraction of the computing power and cost. As a result, the stocks of many Western tech companies plummeted; Nvidia, which sells the chips that run leading AI models, lost more stock value in a single day than any company in history.

Some of that attention involved an element of accusation. Sources alleged that DeepSeek had obtained, without permission, knowledge from OpenAI’s proprietary o1 model by using a technique known as distillation. Much of the news coverage framed this possibility as a shock to the AI industry, implying that DeepSeek had discovered a new, more efficient way to build AI.

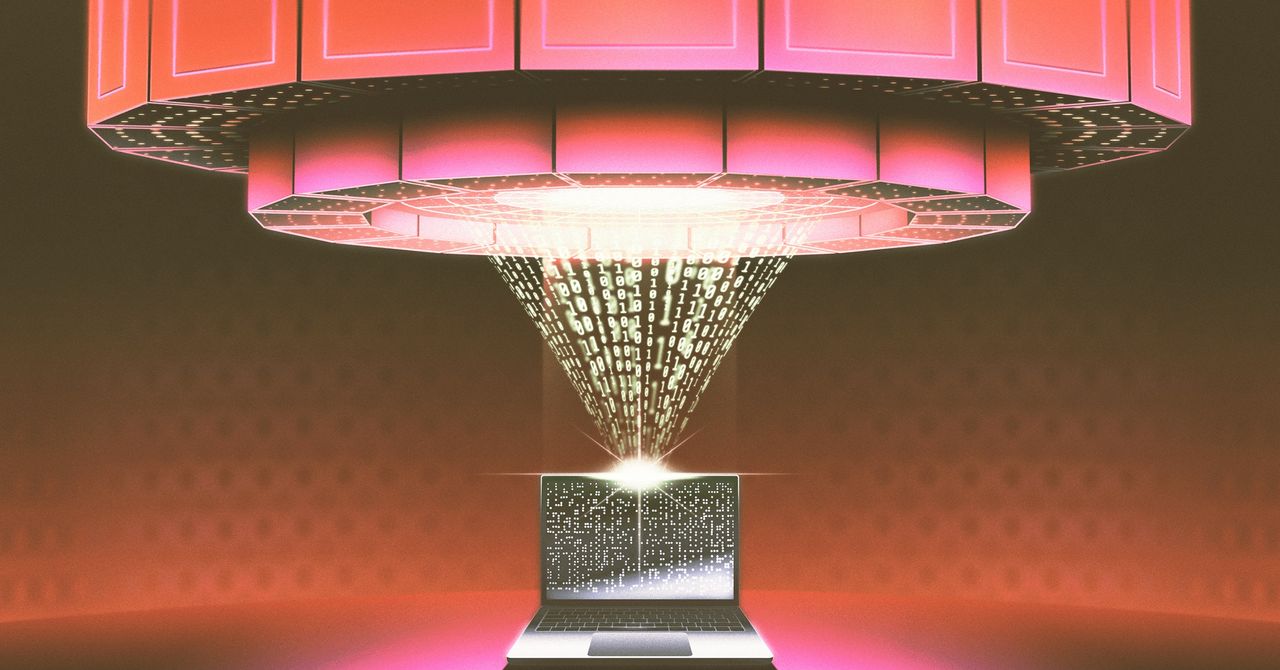

But distillation, also called knowledge distillation, is a widely used tool in AI, a subject of computer science research going back a decade and a tool that big tech companies use on their own models. “Distillation is one of the most important tools that companies have today to make models more efficient,” said Enric Boix-Adsera, a researcher who studies distillation at the University of Pennsylvania’s Wharton School.

Dark knowledge

The idea for distillation began with a 2015 paper by three researchers at Google, including Geoffrey Hinton, the so-called godfather of AI and a 2024 Nobel laureate. At the time, researchers often ran ensembles of models (“many models glued together,” said Oriol Vinyals, a principal scientist at Google DeepMind and one of the paper’s authors) to improve their performance. “But it was incredibly cumbersome and expensive to run all the models in parallel,” Vinyals said. “We were intrigued with the idea of distilling that onto a single model.”

The researchers thought they might make progress by addressing a notable weak point in machine-learning algorithms: Wrong answers were all considered equally bad, regardless of how wrong they might be. In an image-classification model, for instance, “confusing a dog with a fox was penalized the same way as confusing a dog with a pizza,” Vinyals said. The researchers suspected that the ensemble models did contain information about which wrong answers were less bad than others. Perhaps a smaller “student” model could use the information from the large “teacher” model to more quickly grasp the categories it was supposed to sort pictures into. Hinton called this “dark knowledge,” invoking an analogy with cosmological dark matter.
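The weak point Vinyals describes can be seen in a few lines. The sketch below assumes the common cross-entropy loss with a single correct ("hard") label; the two predictions are made-up numbers for illustration, not outputs of any real model.

```python
import math

def cross_entropy(probs, true_label):
    """Loss against a hard label: only the probability of the true class matters."""
    return -math.log(probs[true_label])

# Two hypothetical predictions for a picture whose true label is "dog".
confuses_with_fox = {"dog": 0.2, "fox": 0.7, "pizza": 0.1}
confuses_with_pizza = {"dog": 0.2, "fox": 0.1, "pizza": 0.7}

loss_fox = cross_entropy(confuses_with_fox, "dog")
loss_pizza = cross_entropy(confuses_with_pizza, "dog")

# Both mistakes receive exactly the same penalty.
print(loss_fox, loss_pizza)
```

Because the loss looks only at the probability given to "dog," it cannot tell a near miss (fox) from an absurd one (pizza).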

After discussing this possibility with Hinton, Vinyals developed a way to get the large teacher model to pass more information about the image categories to a smaller student model. The key was homing in on “soft targets” in the teacher model, where it assigns probabilities to each possibility rather than firm this-or-that answers. One model, for example, calculated that there was a 30 percent chance that an image showed a dog, 20 percent that it showed a cat, 5 percent that it showed a cow, and 0.5 percent that it showed a car. By using these probabilities, the teacher model effectively revealed to the student that dogs are quite similar to cats, not so different from cows, and quite distinct from cars. The researchers found that this information would help the student learn how to identify images of dogs, cats, cows, and cars more efficiently. A big, complicated model could be reduced to a leaner one with barely any loss of accuracy.
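A minimal sketch of training against soft targets follows. It assumes a temperature-softened softmax and a KL-divergence loss, which is one common way to set this up; the logits for the teacher and both students are hypothetical values chosen for illustration.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [z / temperature for z in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """How far the student distribution q is from the teacher distribution p."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

CLASSES = ["dog", "cat", "cow", "car"]
T = 2.0  # assumed temperature

# Hypothetical teacher scores: dog most likely, then cat, cow, and car last.
teacher_logits = [4.0, 3.0, 1.5, -2.0]
soft_targets = softmax(teacher_logits, T)

# Two hypothetical students, equally confident about "dog" but with different runners-up.
student_a = [4.0, 3.0, 0.0, 0.0]  # second choice: cat
student_b = [4.0, 0.0, 0.0, 3.0]  # second choice: car

def hard_loss(logits):
    """Hard-label loss for a dog picture: ignores how the remaining mass is spread."""
    return -math.log(softmax(logits)[0])

loss_a = kl_divergence(soft_targets, softmax(student_a, T))
loss_b = kl_divergence(soft_targets, softmax(student_b, T))

print("hard-label losses:", hard_loss(student_a), hard_loss(student_b))
print("soft-target losses:", loss_a, loss_b)
```

Under the hard label the two students look essentially identical, but the soft targets penalize the student that leans toward "car" more heavily than the one that leans toward "cat." That extra signal is the teacher's dark knowledge.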

Explosive growth

The idea was not an immediate hit. The paper was rejected from a conference, and Vinyals, discouraged, turned to other topics. But distillation arrived at an important moment. Around this time, engineers were discovering that the more training data they fed into neural networks, the more effective those networks became. The size of models soon exploded, as did their capabilities, but the costs of running them climbed in step with their size.

Many researchers turned to distillation as a way to make smaller models. In 2018, for instance, Google researchers unveiled a powerful language model called BERT, which the company soon began using to help parse web searches. But BERT was big and costly to run, so the next year, other developers distilled a smaller version sensibly named DistilBERT, which became widely used in business and research. Distillation gradually became ubiquitous, and it is now offered as a service by companies such as Google, OpenAI, and Amazon. The original distillation paper, still published only on the arxiv.org preprint server, has now been cited more than 25,000 times.

Considering that distillation requires access to the innards of the teacher model, it is not possible for a third party to sneakily distill data from a closed-source model like OpenAI’s o1, as DeepSeek was thought to have done. That said, a student model could still learn quite a bit from a teacher model just through prompting the teacher with certain questions and using the answers to train its own models, an almost Socratic approach to distillation.
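The question-and-answer approach can be sketched as a data-collection loop. Everything here is hypothetical: `toy_teacher` is a stand-in for a model reachable only through its text interface, and the prompt/completion record format is an assumption rather than any vendor's actual API.

```python
from typing import Callable, Dict, List

def build_distillation_dataset(
    prompts: List[str],
    ask_teacher: Callable[[str], str],
) -> List[Dict[str, str]]:
    """Query the teacher with each prompt and keep its answers as training examples."""
    dataset = []
    for prompt in prompts:
        answer = ask_teacher(prompt)  # only the text output is visible, no internals
        dataset.append({"prompt": prompt, "completion": answer})
    return dataset

def toy_teacher(prompt: str) -> str:
    """Hypothetical stand-in for a closed model; a real teacher would generate an answer."""
    return f"Teacher's answer to: {prompt}"

prompts = ["What is 2 + 2?", "Name a mammal that can fly."]
dataset = build_distillation_dataset(prompts, toy_teacher)

for example in dataset:
    print(example)
```

The student would then be fine-tuned on these pairs. Unlike the soft-target approach, it never sees the teacher's probabilities, only the answers the teacher chose to give.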

Meanwhile, other researchers continue to find new applications. In January, the NovaSky lab showed that distillation works well for training chain-of-thought reasoning models, which use multistep “thinking” to better answer complicated questions. The lab says its fully open-source Sky-T1 model cost less than $450 to train, and it achieved similar results to a much larger open-source model. “We were genuinely surprised by how well distillation worked in this setting,” said Dacheng Li, a Berkeley doctoral student and co-student lead of the NovaSky team. “Distillation is a fundamental technique in AI.”

Original story reprinted with permission from Quanta Magazine, an editorially independent publication of the Simons Foundation whose mission is to enhance public understanding of science by covering research developments and trends in mathematics and the physical and life sciences.